Online Inference with Ray Serve¶

You can run this notebook directly in

Colab.

For this chapter you need to install the following dependencies:

In [ ]:

Copied!

! pip install "ray[serve]==2.2.0" "transformers==4.21.2"

! pip install "requests==2.28.1" "wikipedia==1.4.0"

! pip install "ray[serve]==2.2.0" "transformers==4.21.2"

! pip install "requests==2.28.1" "wikipedia==1.4.0"

To import utility files for this chapter, on Colab you will also have to clone the repo and copy the code files to the base path of the runtime:

In [ ]:

Copied!

!git clone https://github.com/maxpumperla/learning_ray

%cp -r learning_ray/notebooks/* .

!git clone https://github.com/maxpumperla/learning_ray

%cp -r learning_ray/notebooks/* .

In [ ]:

Copied!

from ray import serve

from transformers import pipeline

@serve.deployment

class SentimentAnalysis:

def __init__(self):

self._classifier = pipeline("sentiment-analysis")

def __call__(self, request) -> str:

input_text = request.query_params["input_text"]

return self._classifier(input_text)[0]["label"]

from ray import serve

from transformers import pipeline

@serve.deployment

class SentimentAnalysis:

def __init__(self):

self._classifier = pipeline("sentiment-analysis")

def __call__(self, request) -> str:

input_text = request.query_params["input_text"]

return self._classifier(input_text)[0]["label"]

In [ ]:

Copied!

basic_deployment = SentimentAnalysis.bind()

basic_deployment = SentimentAnalysis.bind()

In [ ]:

Copied!

# Run this in a separate process to avoid any blocking:

! serve run --non-blocking app:basic_deployment

# Run this in a separate process to avoid any blocking:

! serve run --non-blocking app:basic_deployment

In [ ]:

Copied!

import requests

print(requests.get(

"http://localhost:8000/", params={"input_text": "Hello friend!"}

).json())

import requests

print(requests.get(

"http://localhost:8000/", params={"input_text": "Hello friend!"}

).json())

In [ ]:

Copied!

from fastapi import FastAPI

app = FastAPI()

@serve.deployment

@serve.ingress(app)

class SentimentAnalysis:

def __init__(self):

self._classifier = pipeline("sentiment-analysis")

@app.get("/")

def classify(self, input_text: str) -> str:

return self._classifier(input_text)[0]["label"]

fastapi_deployment = SentimentAnalysis.bind()

from fastapi import FastAPI

app = FastAPI()

@serve.deployment

@serve.ingress(app)

class SentimentAnalysis:

def __init__(self):

self._classifier = pipeline("sentiment-analysis")

@app.get("/")

def classify(self, input_text: str) -> str:

return self._classifier(input_text)[0]["label"]

fastapi_deployment = SentimentAnalysis.bind()

In [ ]:

Copied!

app = FastAPI()

@serve.deployment(num_replicas=2, ray_actor_options={"num_cpus": 2})

@serve.ingress(app)

class SentimentAnalysis:

def __init__(self):

self._classifier = pipeline("sentiment-analysis")

@app.get("/")

def classify(self, input_text: str) -> str:

import os

print("from process:", os.getpid())

return self._classifier(input_text)[0]["label"]

scaled_deployment = SentimentAnalysis.bind()

app = FastAPI()

@serve.deployment(num_replicas=2, ray_actor_options={"num_cpus": 2})

@serve.ingress(app)

class SentimentAnalysis:

def __init__(self):

self._classifier = pipeline("sentiment-analysis")

@app.get("/")

def classify(self, input_text: str) -> str:

import os

print("from process:", os.getpid())

return self._classifier(input_text)[0]["label"]

scaled_deployment = SentimentAnalysis.bind()

In [ ]:

Copied!

app = FastAPI()

@serve.deployment

@serve.ingress(app)

class SentimentAnalysis:

def __init__(self):

self._classifier = pipeline("sentiment-analysis")

@serve.batch(max_batch_size=10, batch_wait_timeout_s=0.1)

async def classify_batched(self, batched_inputs):

print("Got batch size:", len(batched_inputs))

results = self._classifier(batched_inputs)

return [result["label"] for result in results]

@app.get("/")

async def classify(self, input_text: str) -> str:

return await self.classify_batched(input_text)

batched_deployment = SentimentAnalysis.bind()

app = FastAPI()

@serve.deployment

@serve.ingress(app)

class SentimentAnalysis:

def __init__(self):

self._classifier = pipeline("sentiment-analysis")

@serve.batch(max_batch_size=10, batch_wait_timeout_s=0.1)

async def classify_batched(self, batched_inputs):

print("Got batch size:", len(batched_inputs))

results = self._classifier(batched_inputs)

return [result["label"] for result in results]

@app.get("/")

async def classify(self, input_text: str) -> str:

return await self.classify_batched(input_text)

batched_deployment = SentimentAnalysis.bind()

In [ ]:

Copied!

import ray

from ray import serve

from app import batched_deployment

handle = serve.run(batched_deployment)

ray.get([handle.classify.remote("sample text") for _ in range(10)])

import ray

from ray import serve

from app import batched_deployment

handle = serve.run(batched_deployment)

ray.get([handle.classify.remote("sample text") for _ in range(10)])

In [ ]:

Copied!

@serve.deployment

class DownstreamModel:

def __call__(self, inp: str):

return "Hi from downstream model!"

@serve.deployment

class Driver:

def __init__(self, downstream):

self._d = downstream

async def __call__(self, *args) -> str:

return await self._d.remote()

downstream = DownstreamModel.bind()

driver = Driver.bind(downstream)

@serve.deployment

class DownstreamModel:

def __call__(self, inp: str):

return "Hi from downstream model!"

@serve.deployment

class Driver:

def __init__(self, downstream):

self._d = downstream

async def __call__(self, *args) -> str:

return await self._d.remote()

downstream = DownstreamModel.bind()

driver = Driver.bind(downstream)

In [ ]:

Copied!

@serve.deployment

class DownstreamModel:

def __init__(self, my_val: str):

self._my_val = my_val

def __call__(self, inp: str):

return inp + "|" + self._my_val

@serve.deployment

class PipelineDriver:

def __init__(self, model1, model2):

self._m1 = model1

self._m2 = model2

async def __call__(self, *args) -> str:

intermediate = self._m1.remote("input")

final = self._m2.remote(intermediate)

return await final

m1 = DownstreamModel.bind("val1")

m2 = DownstreamModel.bind("val2")

pipeline_driver = PipelineDriver.bind(m1, m2)

@serve.deployment

class DownstreamModel:

def __init__(self, my_val: str):

self._my_val = my_val

def __call__(self, inp: str):

return inp + "|" + self._my_val

@serve.deployment

class PipelineDriver:

def __init__(self, model1, model2):

self._m1 = model1

self._m2 = model2

async def __call__(self, *args) -> str:

intermediate = self._m1.remote("input")

final = self._m2.remote(intermediate)

return await final

m1 = DownstreamModel.bind("val1")

m2 = DownstreamModel.bind("val2")

pipeline_driver = PipelineDriver.bind(m1, m2)

In [ ]:

Copied!

@serve.deployment

class DownstreamModel:

def __init__(self, my_val: str):

self._my_val = my_val

def __call__(self):

return self._my_val

@serve.deployment

class BroadcastDriver:

def __init__(self, model1, model2):

self._m1 = model1

self._m2 = model2

async def __call__(self, *args) -> str:

output1, output2 = self._m1.remote(), self._m2.remote()

return [await output1, await output2]

m1 = DownstreamModel.bind("val1")

m2 = DownstreamModel.bind("val2")

broadcast_driver = BroadcastDriver.bind(m1, m2)

@serve.deployment

class DownstreamModel:

def __init__(self, my_val: str):

self._my_val = my_val

def __call__(self):

return self._my_val

@serve.deployment

class BroadcastDriver:

def __init__(self, model1, model2):

self._m1 = model1

self._m2 = model2

async def __call__(self, *args) -> str:

output1, output2 = self._m1.remote(), self._m2.remote()

return [await output1, await output2]

m1 = DownstreamModel.bind("val1")

m2 = DownstreamModel.bind("val2")

broadcast_driver = BroadcastDriver.bind(m1, m2)

In [ ]:

Copied!

@serve.deployment

class DownstreamModel:

def __init__(self, my_val: str):

self._my_val = my_val

def __call__(self):

return self._my_val

@serve.deployment

class ConditionalDriver:

def __init__(self, model1, model2):

self._m1 = model1

self._m2 = model2

async def __call__(self, *args) -> str:

import random

if random.random() > 0.5:

return await self._m1.remote()

else:

return await self._m2.remote()

m1 = DownstreamModel.bind("val1")

m2 = DownstreamModel.bind("val2")

conditional_driver = ConditionalDriver.bind(m1, m2)

@serve.deployment

class DownstreamModel:

def __init__(self, my_val: str):

self._my_val = my_val

def __call__(self):

return self._my_val

@serve.deployment

class ConditionalDriver:

def __init__(self, model1, model2):

self._m1 = model1

self._m2 = model2

async def __call__(self, *args) -> str:

import random

if random.random() > 0.5:

return await self._m1.remote()

else:

return await self._m2.remote()

m1 = DownstreamModel.bind("val1")

m2 = DownstreamModel.bind("val2")

conditional_driver = ConditionalDriver.bind(m1, m2)

In [ ]:

Copied!

from typing import Optional

import wikipedia

def fetch_wikipedia_page(search_term: str) -> Optional[str]:

results = wikipedia.search(search_term)

# If no results, return to caller.

if len(results) == 0:

return None

# Get the page for the top result.

return wikipedia.page(results[0]).content

from typing import Optional

import wikipedia

def fetch_wikipedia_page(search_term: str) -> Optional[str]:

results = wikipedia.search(search_term)

# If no results, return to caller.

if len(results) == 0:

return None

# Get the page for the top result.

return wikipedia.page(results[0]).content

In [ ]:

Copied!

from ray import serve

from transformers import pipeline

from typing import List

@serve.deployment

class SentimentAnalysis:

def __init__(self):

self._classifier = pipeline("sentiment-analysis")

@serve.batch(max_batch_size=10, batch_wait_timeout_s=0.1)

async def is_positive_batched(self, inputs: List[str]) -> List[bool]:

results = self._classifier(inputs, truncation=True)

return [result["label"] == "POSITIVE" for result in results]

async def __call__(self, input_text: str) -> bool:

return await self.is_positive_batched(input_text)

from ray import serve

from transformers import pipeline

from typing import List

@serve.deployment

class SentimentAnalysis:

def __init__(self):

self._classifier = pipeline("sentiment-analysis")

@serve.batch(max_batch_size=10, batch_wait_timeout_s=0.1)

async def is_positive_batched(self, inputs: List[str]) -> List[bool]:

results = self._classifier(inputs, truncation=True)

return [result["label"] == "POSITIVE" for result in results]

async def __call__(self, input_text: str) -> bool:

return await self.is_positive_batched(input_text)

In [ ]:

Copied!

@serve.deployment(num_replicas=2)

class Summarizer:

def __init__(self, max_length: Optional[int] = None):

self._summarizer = pipeline("summarization")

self._max_length = max_length

def __call__(self, input_text: str) -> str:

result = self._summarizer(

input_text, max_length=self._max_length, truncation=True)

return result[0]["summary_text"]

@serve.deployment(num_replicas=2)

class Summarizer:

def __init__(self, max_length: Optional[int] = None):

self._summarizer = pipeline("summarization")

self._max_length = max_length

def __call__(self, input_text: str) -> str:

result = self._summarizer(

input_text, max_length=self._max_length, truncation=True)

return result[0]["summary_text"]

In [ ]:

Copied!

@serve.deployment

class EntityRecognition:

def __init__(self, threshold: float = 0.90, max_entities: int = 10):

self._entity_recognition = pipeline("ner")

self._threshold = threshold

self._max_entities = max_entities

def __call__(self, input_text: str) -> List[str]:

final_results = []

for result in self._entity_recognition(input_text):

if result["score"] > self._threshold:

final_results.append(result["word"])

if len(final_results) == self._max_entities:

break

return final_results

@serve.deployment

class EntityRecognition:

def __init__(self, threshold: float = 0.90, max_entities: int = 10):

self._entity_recognition = pipeline("ner")

self._threshold = threshold

self._max_entities = max_entities

def __call__(self, input_text: str) -> List[str]:

final_results = []

for result in self._entity_recognition(input_text):

if result["score"] > self._threshold:

final_results.append(result["word"])

if len(final_results) == self._max_entities:

break

return final_results

In [ ]:

Copied!

from pydantic import BaseModel

class Response(BaseModel):

success: bool

message: str = ""

summary: str = ""

named_entities: List[str] = []

from pydantic import BaseModel

class Response(BaseModel):

success: bool

message: str = ""

summary: str = ""

named_entities: List[str] = []

In [ ]:

Copied!

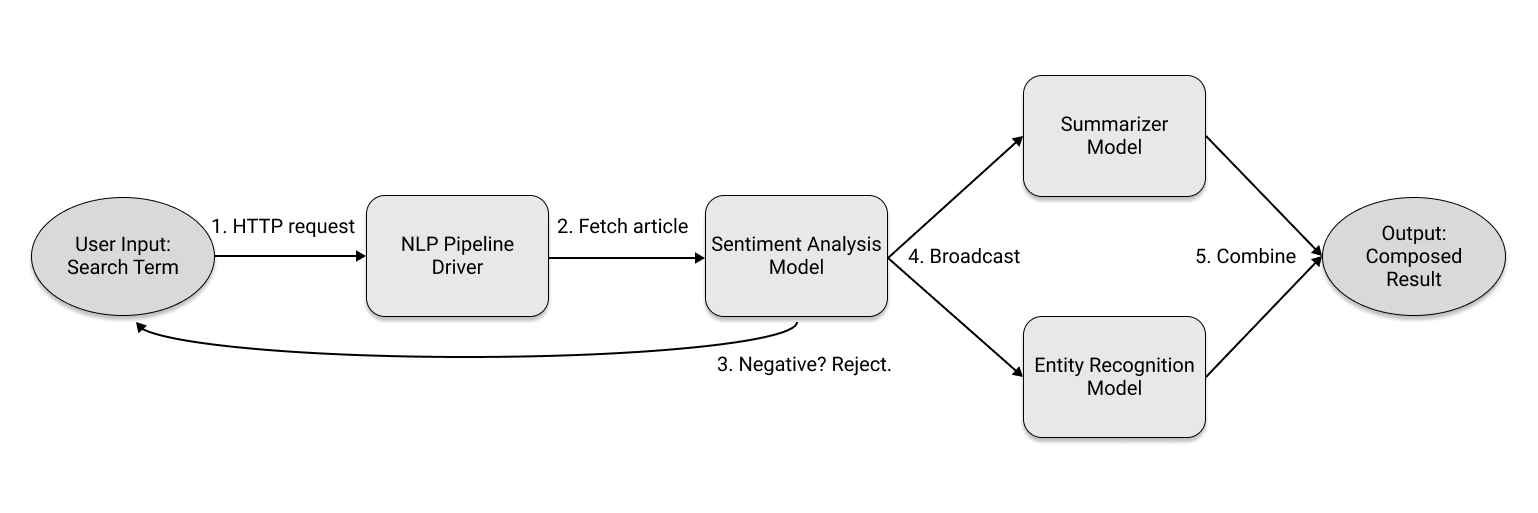

from fastapi import FastAPI

app = FastAPI()

@serve.deployment

@serve.ingress(app)

class NLPPipelineDriver:

def __init__(self, sentiment_analysis, summarizer, entity_recognition):

self._sentiment_analysis = sentiment_analysis

self._summarizer = summarizer

self._entity_recognition = entity_recognition

@app.get("/", response_model=Response)

async def summarize_article(self, search_term: str) -> Response:

# Fetch the top page content for the search term if found.

page_content = fetch_wikipedia_page(search_term)

if page_content is None:

return Response(success=False, message="No pages found.")

# Conditionally continue based on the sentiment analysis.

is_positive = await self._sentiment_analysis.remote(page_content)

if not is_positive:

return Response(success=False, message="Only positivitiy allowed!")

# Query the summarizer and named entity recognition models in parallel.

summary_result = self._summarizer.remote(page_content)

entities_result = self._entity_recognition.remote(page_content)

return Response(

success=True,

summary=await summary_result,

named_entities=await entities_result

)

from fastapi import FastAPI

app = FastAPI()

@serve.deployment

@serve.ingress(app)

class NLPPipelineDriver:

def __init__(self, sentiment_analysis, summarizer, entity_recognition):

self._sentiment_analysis = sentiment_analysis

self._summarizer = summarizer

self._entity_recognition = entity_recognition

@app.get("/", response_model=Response)

async def summarize_article(self, search_term: str) -> Response:

# Fetch the top page content for the search term if found.

page_content = fetch_wikipedia_page(search_term)

if page_content is None:

return Response(success=False, message="No pages found.")

# Conditionally continue based on the sentiment analysis.

is_positive = await self._sentiment_analysis.remote(page_content)

if not is_positive:

return Response(success=False, message="Only positivitiy allowed!")

# Query the summarizer and named entity recognition models in parallel.

summary_result = self._summarizer.remote(page_content)

entities_result = self._entity_recognition.remote(page_content)

return Response(

success=True,

summary=await summary_result,

named_entities=await entities_result

)

In [ ]:

Copied!

sentiment_analysis = SentimentAnalysis.bind()

summarizer = Summarizer.bind()

entity_recognition = EntityRecognition.bind(threshold=0.95, max_entities=5)

nlp_pipeline_driver = NLPPipelineDriver.bind(

sentiment_analysis, summarizer, entity_recognition)

sentiment_analysis = SentimentAnalysis.bind()

summarizer = Summarizer.bind()

entity_recognition = EntityRecognition.bind(threshold=0.95, max_entities=5)

nlp_pipeline_driver = NLPPipelineDriver.bind(

sentiment_analysis, summarizer, entity_recognition)

In [ ]:

Copied!

# Run this in a separate process to avoid any blocking:

! serve run --non-blocking app:nlp_pipeline_driver

# Run this in a separate process to avoid any blocking:

! serve run --non-blocking app:nlp_pipeline_driver

In [ ]:

Copied!

import requests

print(requests.get(

"http://localhost:8000/", params={"search_term": "rayserve"}

).text)

import requests

print(requests.get(

"http://localhost:8000/", params={"search_term": "rayserve"}

).text)

In [ ]:

Copied!

print(requests.get(

"http://localhost:8000/", params={"search_term": "war"}

).text)

print(requests.get(

"http://localhost:8000/", params={"search_term": "war"}

).text)

In [ ]:

Copied!

print(requests.get(

"http://localhost:8000/", params={"search_term": "physicist"}

).text)

print(requests.get(

"http://localhost:8000/", params={"search_term": "physicist"}

).text)